Icii Specs

Icii, the new standard for Source AI

The Nvidia Jetson has become the preferred option for Edge AI. However, more and more people are realizing that Edge GPUs are not an option for Source AI because of performance, power, heat, size, or cost constraints.

Icii + FPGA outperforms the Jetson Nano in both throughput and power consumption. Icii’s Yeti is in Alpha, and Icii’s specs are based on our current AI model implementations. For comparison, we use MobileNet v2 (MV2), which needs ~300 million multiply-accumulates (MACs) per inference. Icii optimizes memory bandwidth and scheduling to achieve maximal throughput with minimal power. Our projections are based on our current implementations and conservative estimates, and assume the following:

- Xilinx Artix-7, XC7A100T

- 128 DSP cores (current demos use 32)

- 350 MHz DSP clock (current demos use 100 MHz)

- 2 8-bit multiplications per DSP (current demos use 1)

- 128 bits per DDR read/write (current demos use 32 or 8 bits)

- 15% overhead
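As a rough sanity check on the assumptions above, the Python sketch below computes a compute-only ceiling on MV2 throughput: DSP count × clock × multiplications per DSP, divided by ~300 million MACs per inference. Two points are our own assumptions, not part of the spec: we read "15% overhead" as 15% of peak throughput being lost, and `compute_bound_ips` is a hypothetical helper. The sketch ignores the 128-bit DDR width entirely, so it gives an upper bound only. The table's 141 inferences per second sits below this ceiling, presumably reflecting memory bandwidth and scheduling effects that are not modeled here.

```python
# Hypothetical back-of-envelope model: compute-bound ceiling only.
# Parameters mirror the assumptions listed above; DDR bandwidth is NOT modeled.

MV2_MACS = 300e6  # ~300 million MACs per MobileNet v2 inference


def compute_bound_ips(dsps: int, clock_hz: float, macs_per_dsp: int,
                      overhead: float, macs_per_inference: float = MV2_MACS) -> float:
    """Upper bound on inferences per second if every DSP did useful work every
    cycle, reduced by a fractional overhead (assumed: 0.15 means 15% of peak lost)."""
    peak_macs_per_s = dsps * clock_hz * macs_per_dsp
    return peak_macs_per_s * (1.0 - overhead) / macs_per_inference


# Projected configuration vs. the configuration used in current demos.
projected = compute_bound_ips(dsps=128, clock_hz=350e6, macs_per_dsp=2, overhead=0.15)
demo = compute_bound_ips(dsps=32, clock_hz=100e6, macs_per_dsp=1, overhead=0.15)

print(f"projected config ceiling: {projected:.0f} inferences/s")
print(f"current demo ceiling:     {demo:.1f} inferences/s")
print(f"compute scaling factor:   {projected / demo:.0f}x")
```

Under these assumptions the projected configuration has roughly 28 times the raw compute of the current demos (4× the DSPs, 3.5× the clock, 2× the multiplications per DSP), for a ceiling of about 254 inferences per second.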

| | Icii | Jetson Nano |
|---|---|---|
| Inferences Per Second | 141 | 64 * |
| Power Consumption | 2 – 3 W | 7 – 10 W ** |
| Size | 3.1 sq in | 4.8 sq in * |
| Fan Needed | No | Yes |

* https://developer.nvidia.com/embedded/jetson-nano-dl-inference-benchmarks

** https://www.xilinx.com/support/documentation/white_papers/wp529-som-benchmarks.pdf

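To make the power comparison concrete, the Python sketch below divides each platform's inferences per second from the table by its power range, giving inferences per second per watt. It reuses the table's numbers and nothing else; it assumes each throughput figure holds across the whole stated power range, and `ips_per_watt` is a hypothetical helper.

```python
# Figures copied from the comparison table; power ranges are (low, high) in watts.
ICII_IPS, JETSON_IPS = 141, 64            # inferences per second
ICII_W, JETSON_W = (2.0, 3.0), (7.0, 10.0)


def ips_per_watt(ips: float, watts: tuple[float, float]) -> tuple[float, float]:
    """Return (worst, best) inferences per second per watt over a power range."""
    low_w, high_w = watts
    return ips / high_w, ips / low_w


icii = ips_per_watt(ICII_IPS, ICII_W)
jetson = ips_per_watt(JETSON_IPS, JETSON_W)

print(f"Icii:        {icii[0]:.1f} - {icii[1]:.1f} inferences/s per W")
print(f"Jetson Nano: {jetson[0]:.1f} - {jetson[1]:.1f} inferences/s per W")
# Pairing Icii's worst case with the Jetson Nano's best case is the most conservative ratio.
print(f"conservative advantage: {icii[0] / jetson[1]:.1f}x")
```

Pairing Icii at 3 W against the Jetson Nano at 7 W yields roughly a 5x advantage in inferences per watt; the optimistic pairing (2 W vs. 10 W) gives about 11x.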

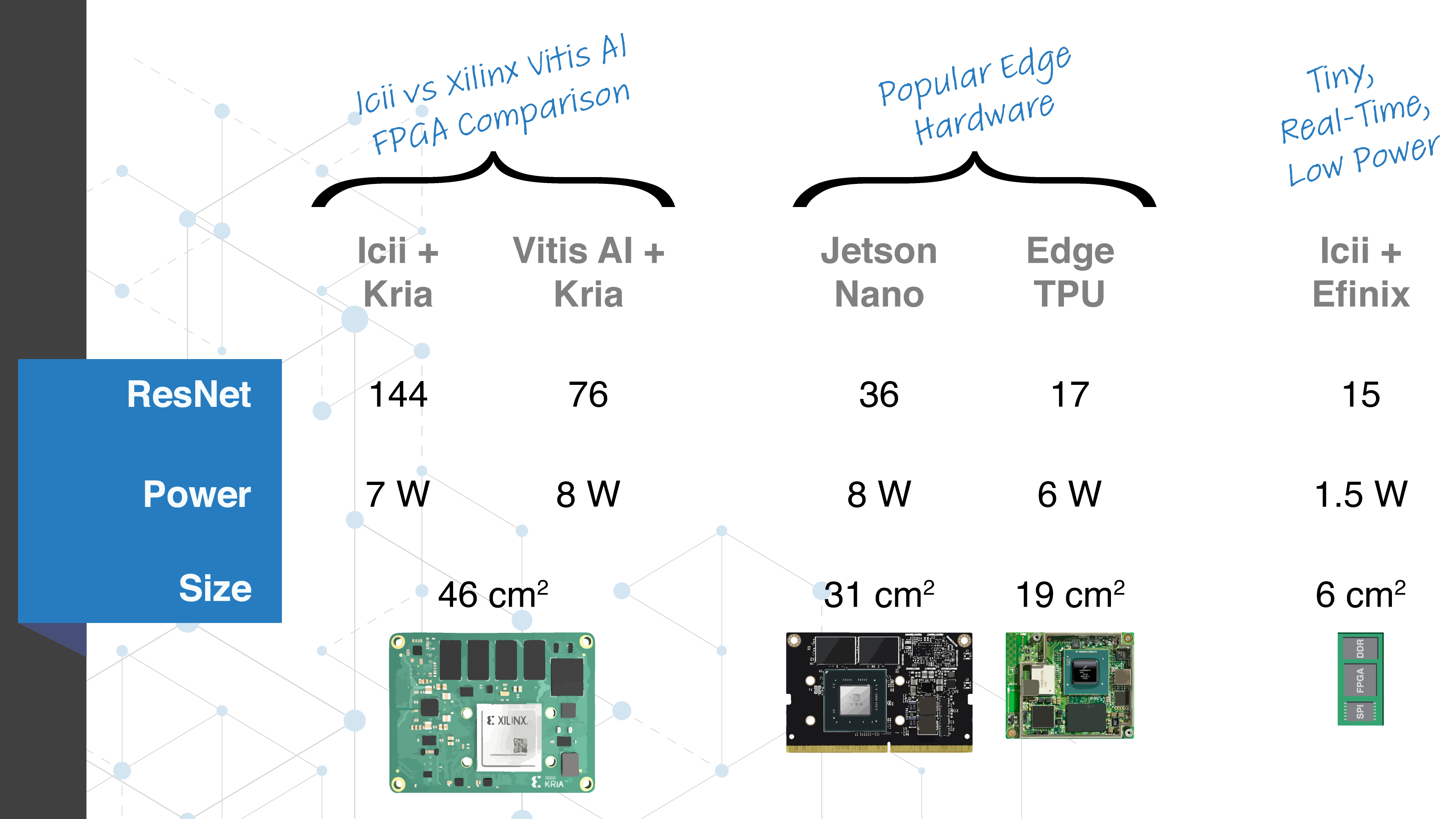

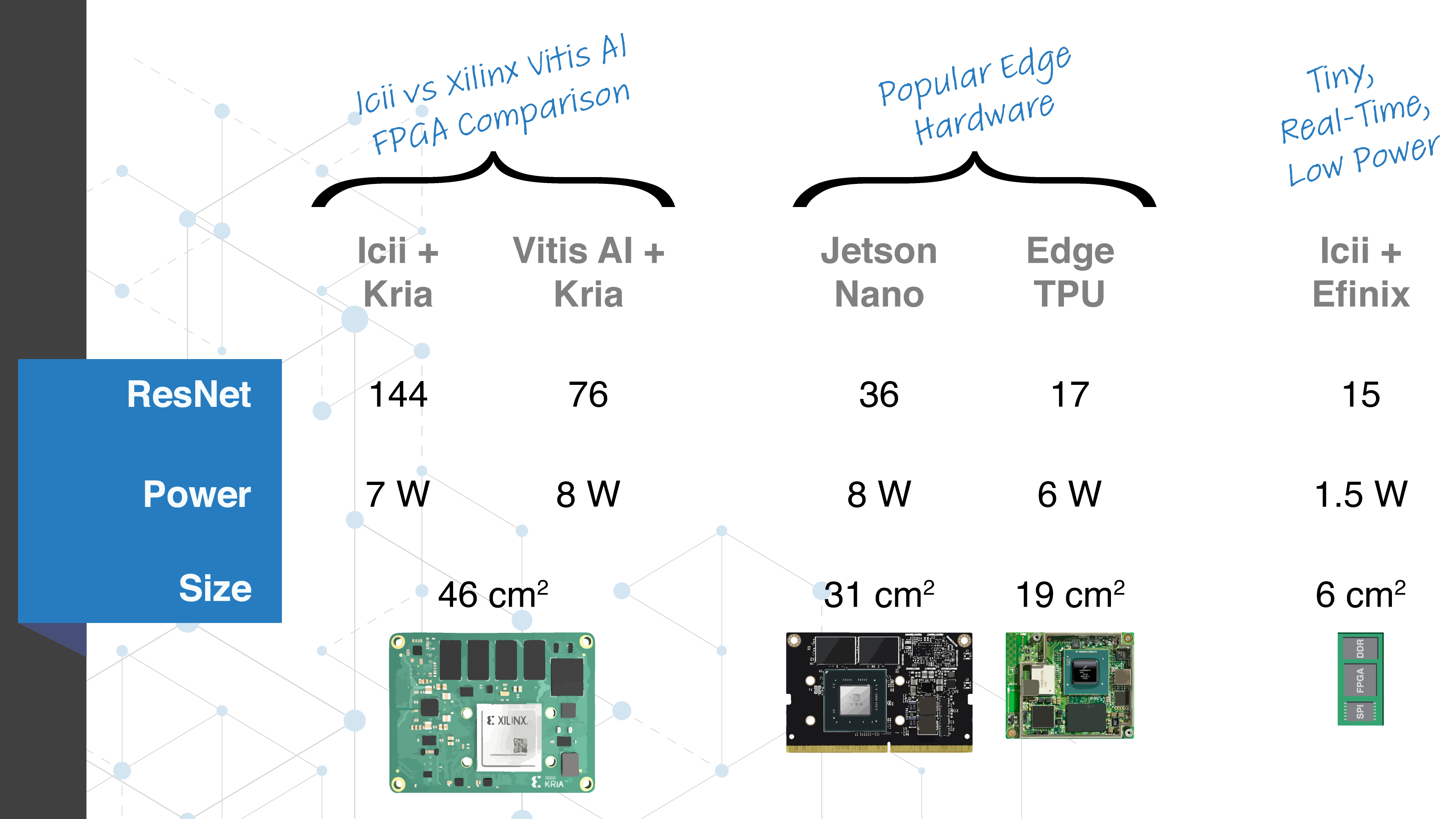

To compare Icii across two other FPGAs, the Xilinx Kria and an Efinix FPGA, Icii projects the following specs: